IEEE Robotic Computing

Our paper was accepted at IEEE Robotic Computing! It’s about teaching a robotic agent to pay less attention to incidental appearance cues in the environment, such as wall colors.

Current methods get trapped because they learn to look for the sofa near white walls, since living rooms usually have white walls. We have some funny videos of such traps, where the wall color differs from the one seen during training (see Videos for a demo).

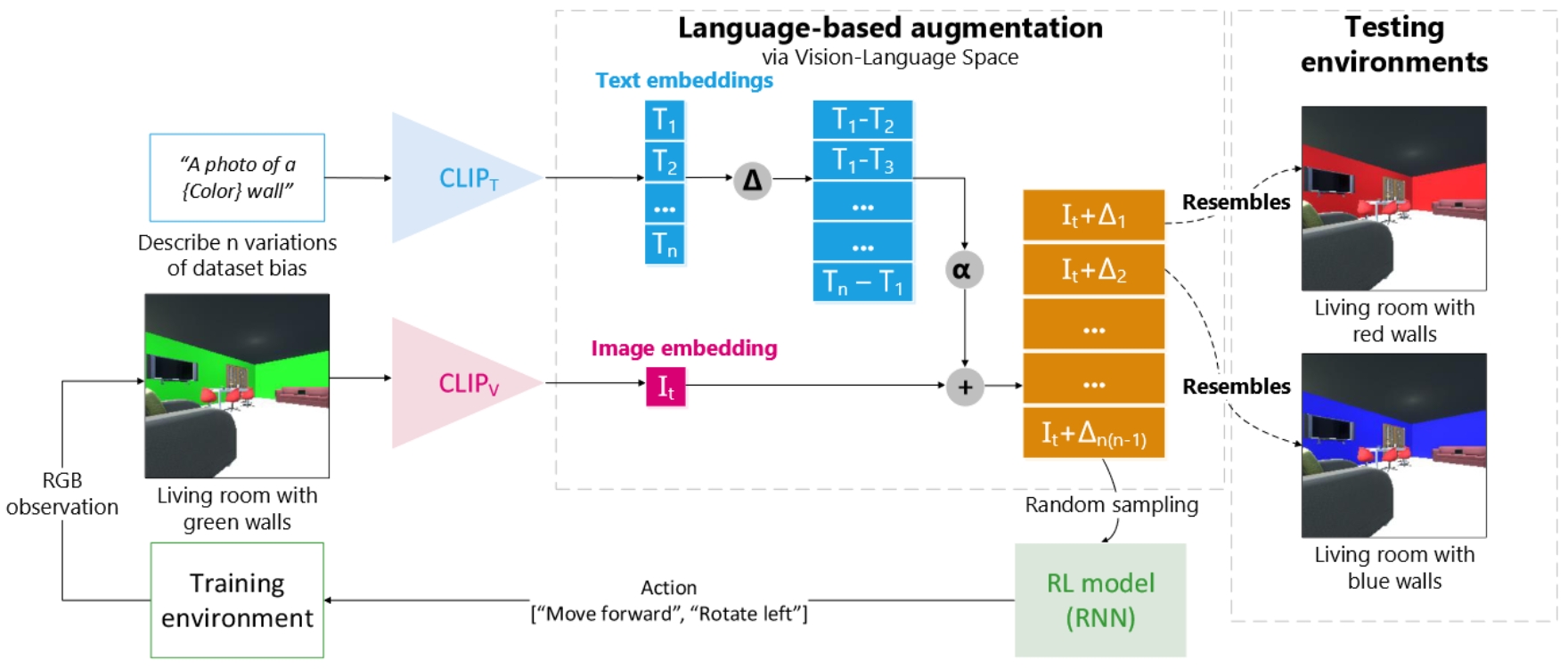

Our method applies an augmentation in language space, stating that a wall can also be red, blue, and so on. We add these augmentation vectors to the visual feature vector before it goes into the agent’s model, so the agent has to generalize across such changes.
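
To make the idea concrete, here is a minimal sketch of adding a language-derived augmentation vector to a visual feature. It is not the code from the paper: `embed_text` is a hypothetical stand-in for a real text encoder, and the color list, the feature dimension `DIM`, the prompt wording and the `scale` factor are all assumptions chosen for illustration.

```python
import zlib

import numpy as np

DIM = 512  # assumed size of the shared feature space
WALL_COLORS = ["red", "blue", "green", "yellow"]  # illustrative color list


def embed_text(phrase: str, dim: int = DIM) -> np.ndarray:
    """Hypothetical stand-in for a text encoder.

    Returns a unit-length pseudo-random vector that is deterministic
    for a given phrase. A real system would use a learned encoder.
    """
    seed = zlib.crc32(phrase.encode("utf-8"))
    vec = np.random.default_rng(seed).standard_normal(dim)
    return vec / np.linalg.norm(vec)


def augment_feature(visual_feature, rng, scale=0.1):
    """Add the embedding of a randomly chosen wall-color phrase.

    `scale` (an assumption) controls how strongly the feature is perturbed.
    Returns the augmented feature and the color that was sampled.
    """
    color = str(rng.choice(WALL_COLORS))
    aug = embed_text(f"a {color} wall", dim=visual_feature.shape[-1])
    return visual_feature + scale * aug, color


# Usage: perturb one feature vector before passing it to the agent's model.
rng = np.random.default_rng(0)
feature = np.ones(DIM)
augmented, color = augment_feature(feature, rng)
```

During training, the agent would see `augmented` instead of `feature`, with a newly sampled color each time, so it cannot rely on one specific wall color.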

The reviewers were very positive about this idea and its impact. See Publications for more information, a demo, and code.