Slot Attention with Tiebreaking

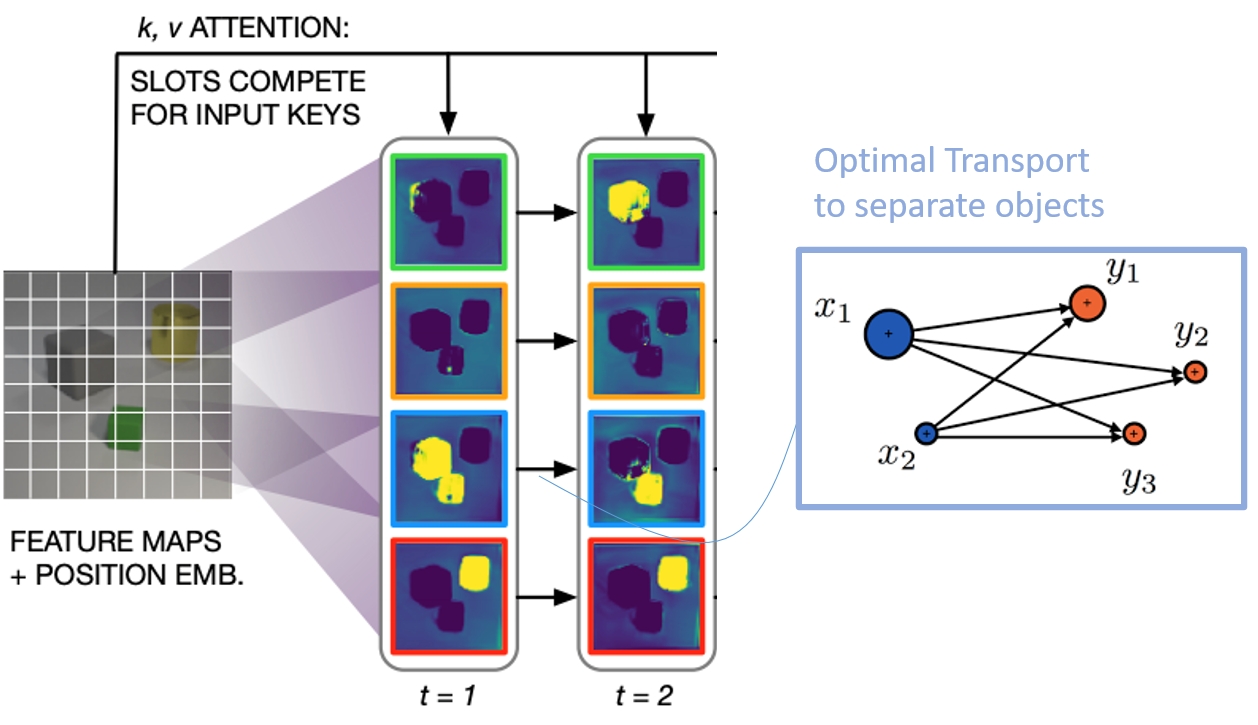

Slot attention is a powerful method for object-centric modeling in images and videos, but it struggles to separate similar objects. We extend it with an attention module based on optimal transport to improve this tiebreaking.

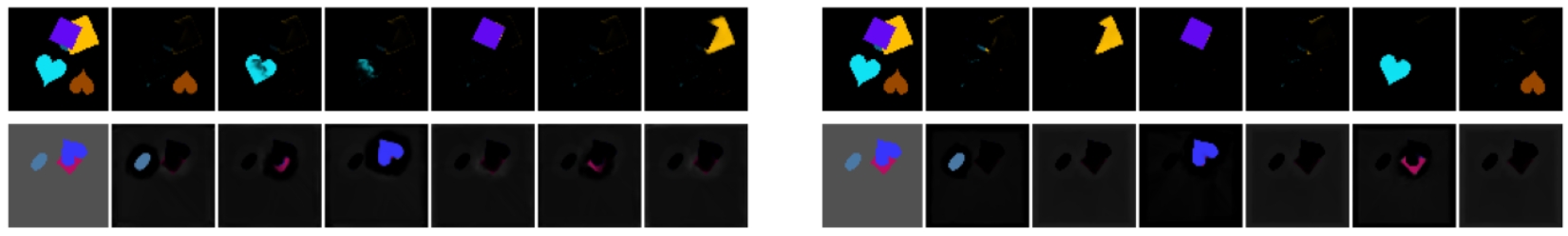

The illustration compares the original slot attention (left) with ours (right) on two input images (rows), each of which is split into slots. The slots should describe the objects. With the original, the objects break into parts; our variant keeps objects together.

We propose MESH (Minimize Entropy of Sinkhorn): a cross-attention module that combines the tiebreaking properties of unregularized optimal transport with the speed of regularized optimal transport. We evaluate slot attention using MESH on multiple object-centric learning benchmarks and find significant improvements over slot attention in every setting.
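To make the terms above concrete, here is a minimal sketch of Sinkhorn iterations for entropy-regularized optimal transport, together with the entropy of the resulting transport plan. It is not the MESH implementation from the paper. The function names `sinkhorn` and `entropy`, the uniform marginals, the value of `eps`, and the toy 2×2 cost matrices are all assumptions chosen for illustration.

```python
import math

def sinkhorn(cost, eps=0.05, n_iters=50):
    """Illustrative entropy-regularized OT plan with uniform marginals."""
    n, m = len(cost), len(cost[0])
    # Gibbs kernel: low cost -> large kernel entry.
    K = [[math.exp(-c / eps) for c in row] for row in cost]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Rescale so every row of the plan sums to 1/n.
        u = [(1.0 / n) / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        # Rescale so every column of the plan sums to 1/m.
        v = [(1.0 / m) / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]

def entropy(plan):
    """Shannon entropy of a transport plan (zero entries are skipped)."""
    return -sum(p * math.log(p) for row in plan for p in row if p > 0.0)

# Hypothetical toy costs: rows are slots, columns are inputs.
tied = [[1.0, 1.0], [1.0, 1.0]]      # both slots match both inputs equally
nudged = [[0.8, 1.0], [1.0, 0.8]]    # a small preference breaks the tie

plan_tied = sinkhorn(tied)
plan_nudged = sinkhorn(nudged)

print("tied plan:  ", plan_tied, "entropy:", entropy(plan_tied))
print("nudged plan:", plan_nudged, "entropy:", entropy(plan_nudged))
```

In this toy case the exactly tied cost matrix yields a uniform plan, so regularized transport alone leaves the tie unresolved, while the nudged costs give a sharper plan with lower entropy. As the name suggests, MESH minimizes this entropy; the procedure it uses to do so is described in the paper and is not reproduced here.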

Paper at ICML 2023 (see Publications). Funded by NWO Efficient Deep Learning and TNO Appl.AI’s Scientific Exploration.