Deployable Decision Making in Embodied Systems

In December, I attended the NeurIPS Workshop on Deployable Decision Making in Embodied Systems to present our paper on modeling uncertainty in deep learning.

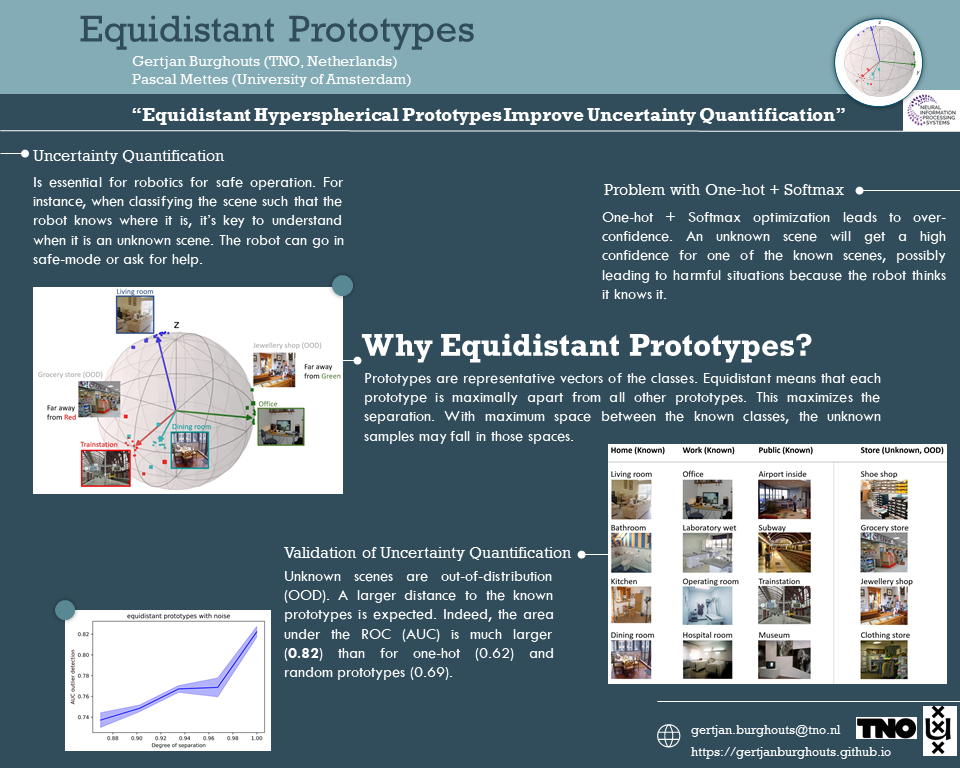

This was our poster:

Great talks by (and panel discussions with) experts in robotics, vision, and machine learning from DeepMind, MIT, Columbia, EPFL, Boeing, etc.

The focus was the robustness of systems that have machine learning components. Two key questions were: how can models be learned safely, and how can model predictions be handled safely?

Boeing mentioned that they only consider a machine learning component for a larger system if, besides its regular output (e.g., a label with a confidence), it also produces a second output indicating its competence for the current image or object. Interesting thought!
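To make the idea concrete, here is a minimal sketch of what such a two-output component could look like. This is my own illustration, not Boeing's method: the names `Prediction`, `competence_score`, `predict`, and `accept` are hypothetical, the classifier is a toy stand-in, and the competence estimate (how far the input lies from the mean of the training data) is just one simple assumption among many possible choices.

```python
from dataclasses import dataclass
import math


@dataclass
class Prediction:
    label: str
    confidence: float  # the model's own confidence in the label, in [0, 1]
    competence: float  # separate score for how familiar this input is, in (0, 1]


def competence_score(features, train_mean, scale=1.0):
    # Hypothetical estimate: decays with distance from the training-data mean.
    return math.exp(-math.dist(features, train_mean) / scale)


def predict(features, train_mean):
    # Toy stand-in classifier (assumption): a sigmoid on the first feature.
    p = 1.0 / (1.0 + math.exp(-features[0]))
    label = "obstacle" if p >= 0.5 else "clear"
    confidence = max(p, 1.0 - p)
    return Prediction(label, confidence, competence_score(features, train_mean))


def accept(pred, min_confidence=0.8, min_competence=0.5):
    # The larger system uses the prediction only if both outputs pass.
    return pred.confidence >= min_confidence and pred.competence >= min_competence


train_mean = [2.0, 0.0]
familiar = predict([2.5, 0.1], train_mean)    # close to the training data
unfamiliar = predict([9.0, 7.0], train_mean)  # far from the training data
```

In this toy setup the unfamiliar input still gets a very high confidence, but its competence score is close to zero, so `accept` rejects it. That is the case a confidence value alone would miss.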

The workshop website is here.