Robot Action Planning by Active Inference

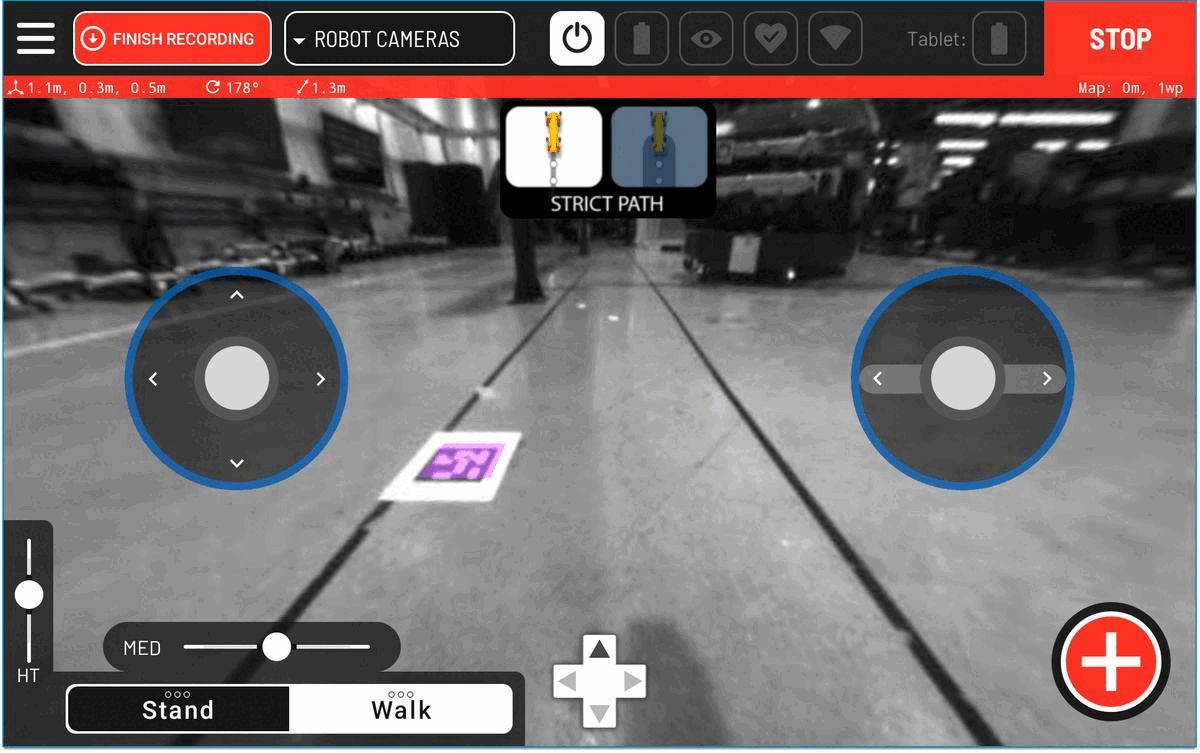

Autonomous inspection robots need to plan ahead while reasoning about several sources of uncertainty in an open world. Such inspections aim to reduce uncertainty about the true state of the world, so it is important that robots choose their sensing actions such that the uncertainty in the resulting observations is low.

A recent trend is to address this type of planning problem with active inference, a formalism for action and perception based on the free energy principle. The applicability of active inference is currently limited to small problems because it typically requires full policy enumeration, and it cannot be used directly to reduce state uncertainty.
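
To illustrate why full policy enumeration limits problem size, the sketch below counts fixed-length action sequences. It assumes a policy is an open-loop sequence of actions over a planning horizon; the action names and horizons are hypothetical and not taken from the paper.

```python
from itertools import product

def enumerate_policies(actions, horizon):
    """Return every action sequence of the given length (|A|^H of them)."""
    return list(product(actions, repeat=horizon))

# Hypothetical action set for an inspection robot (illustration only).
actions = ["move", "look", "wait"]

# The number of policies grows exponentially with the horizon.
counts = {h: len(enumerate_policies(actions, h)) for h in (1, 2, 5, 8)}
for horizon, n in counts.items():
    print(f"horizon={horizon}: {n} policies")
```

Even with three actions, the count is already in the thousands at a horizon of eight, which is why a sampling-based search becomes attractive.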

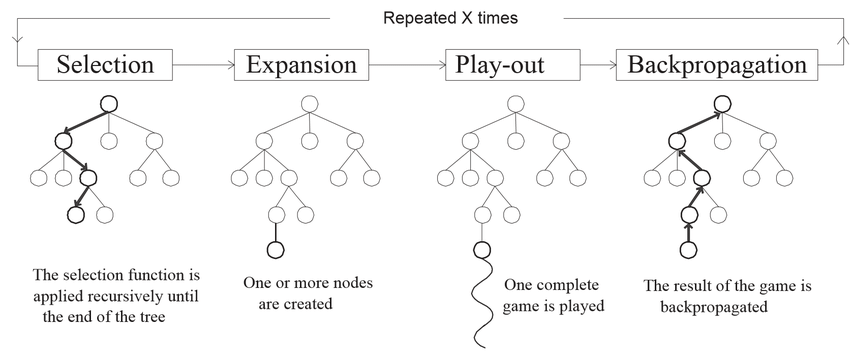

In this paper we show how active inference can be integrated into online planning algorithms for POMDPs, and how minimization of state uncertainty can be modeled within the active inference framework. Our planning algorithm is a tailored Monte Carlo Tree Search algorithm that uses free energy as the reward signal, with reduction of state uncertainty expressed in terms of free energy. In a series of experiments we show that our planning algorithm based on active inference effectively reduces state uncertainty during inspection tasks. Compared to a baseline POMDP algorithm, it chooses actions that result in lower uncertainty.
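
As a rough illustration of rewarding uncertainty reduction, the sketch below scores sensing actions by the negative expected entropy of the posterior belief in a small discrete model. This is a simplified stand-in and not the paper's free energy formulation, which is not spelled out here; the two-state belief, the sensor names, and the observation probabilities are all assumptions made for the example.

```python
import math

def entropy(belief):
    """Shannon entropy (in nats) of a discrete belief over states."""
    return -sum(p * math.log(p) for p in belief if p > 0.0)

def bayes_update(belief, likelihood):
    """Posterior over states given likelihood[s] = P(observation | s)."""
    unnorm = [b * l for b, l in zip(belief, likelihood)]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def expected_posterior_entropy(belief, obs_model):
    """Average posterior entropy over observations; obs_model[o][s] = P(o | s)."""
    expected = 0.0
    for likelihood in obs_model:
        p_obs = sum(b * l for b, l in zip(belief, likelihood))
        if p_obs > 0.0:
            expected += p_obs * entropy(bayes_update(belief, likelihood))
    return expected

# Assumed prior: the robot is maximally uncertain between two states.
belief = [0.5, 0.5]

# Hypothetical sensing actions with assumed observation models.
sensors = {
    "close_look": [[0.9, 0.1], [0.1, 0.9]],  # informative sensor
    "far_look": [[0.6, 0.4], [0.4, 0.6]],    # noisy sensor
}

# Reward is the negative expected entropy, so lower uncertainty scores higher.
rewards = {a: -expected_posterior_entropy(belief, m) for a, m in sensors.items()}
best_action = max(rewards, key=rewards.get)
print(best_action, rewards)
```

In a tree search, a per-step signal of this kind would be accumulated along simulated action and observation sequences; here it is evaluated for a single step only.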