Seeing the Bigger Picture: How Logic Helps AI Understand Scenes

Our paper “Neurosymbolic Inference on Foundation Models for Remote Sensing Text-to-image Retrieval with Complex Queries” was accepted for publication in ACM Transactions on Spatial Algorithms and Systems.

The method addresses situation understanding: we reason about the content of an image, focusing on the relationships between objects, because those relationships are what make a situation interpretable. For example, a van next to a boat, away from the highway, may indicate illegal offloading.

We have found that modern multimodal AI models perform poorly at interpreting such relationships between objects, especially when the relationships become more complex and involve multiple entities. For example: A is close to B and C, while B and C are aligned one behind the other, and C is of the same type as D.

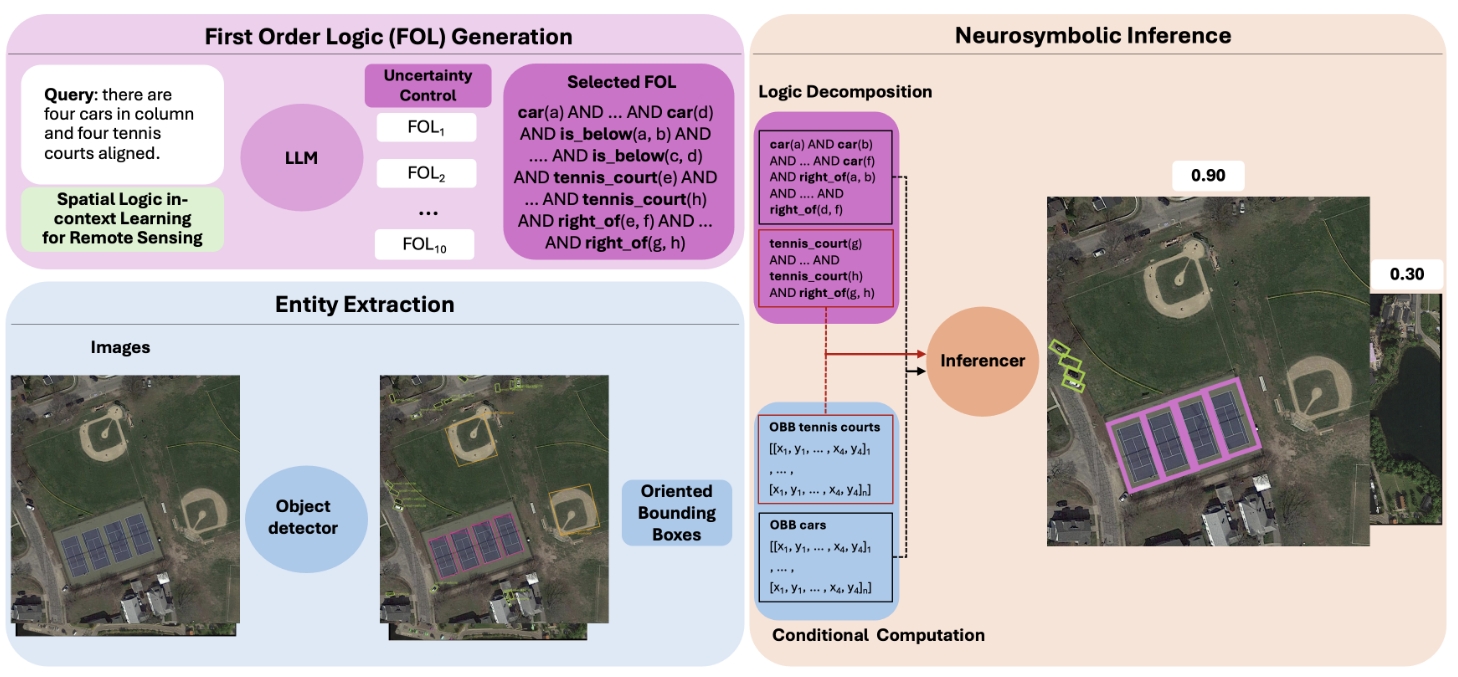

That is why we take a different approach: we use logic. With first-order logic (FOL), you can precisely define the relationships of interest. We evaluate this logic with a neurosymbolic layer that we place on top of a foundation model. This layer combines the information about objects extracted from the images with the logic describing the relationships of interest.
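To make the idea concrete, here is a minimal sketch of how the example pattern (A is close to B and C, B and C are aligned, C is of the same type as D) could be checked against a list of detected objects. It is an illustration under stated assumptions, not the method from the paper: the `Obj` class, the predicate functions, the distance thresholds, and the toy scene are all hypothetical, and the predicates are crisp true/false tests, whereas a neurosymbolic layer would typically also take the detector's uncertainty into account.

```python
from dataclasses import dataclass
from itertools import permutations
from math import hypot


@dataclass(frozen=True)
class Obj:
    """A detected object: a label, a type, and a position (hypothetical format)."""
    name: str
    kind: str
    x: float
    y: float


def close_to(a: Obj, b: Obj, max_dist: float = 10.0) -> bool:
    # Assumed threshold: "close" means within max_dist units.
    return hypot(a.x - b.x, a.y - b.y) <= max_dist


def aligned(a: Obj, b: Obj, tol: float = 1.0) -> bool:
    # Assumed definition: roughly the same x or the same y coordinate.
    return abs(a.x - b.x) <= tol or abs(a.y - b.y) <= tol


def same_type(a: Obj, b: Obj) -> bool:
    return a.kind == b.kind


def matches(objects: list[Obj]) -> bool:
    # exists distinct A, B, C, D:
    #   close_to(A, B) and close_to(A, C) and aligned(B, C) and same_type(C, D)
    return any(
        close_to(a, b) and close_to(a, c) and aligned(b, c) and same_type(c, d)
        for a, b, c, d in permutations(objects, 4)
    )


# Toy scene: a van near two boats moored one behind the other, plus a distant boat.
scene = [
    Obj("van", "vehicle", 0.0, 0.0),
    Obj("boat1", "boat", 5.0, 3.0),
    Obj("boat2", "boat", 5.0, -4.0),
    Obj("boat3", "boat", 40.0, 40.0),
]

print(matches(scene))      # pattern present in the full scene
print(matches(scene[:3]))  # fewer than four objects, so no match
```

The brute-force search over all ordered 4-tuples is only practical for a handful of objects; it is used here because it mirrors the existential quantifiers of the formula one to one.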

Here is the key part: the user queries the system in natural language. An LLM that we have specialized using in-context learning translates this textual query into FOL. Each image can then be analyzed to determine whether a relational pattern matching the user’s query is present. You can see this in the following figure: