Weighting evidence by trustworthiness

Our aim is to increase the autonomy of the SPOT robot. Our research covers perception, planning and self-management. For perception, one of the challenges is to understand objects, even novel ones that have not been seen before. Novel objects are detected by recognizing and combining their parts.

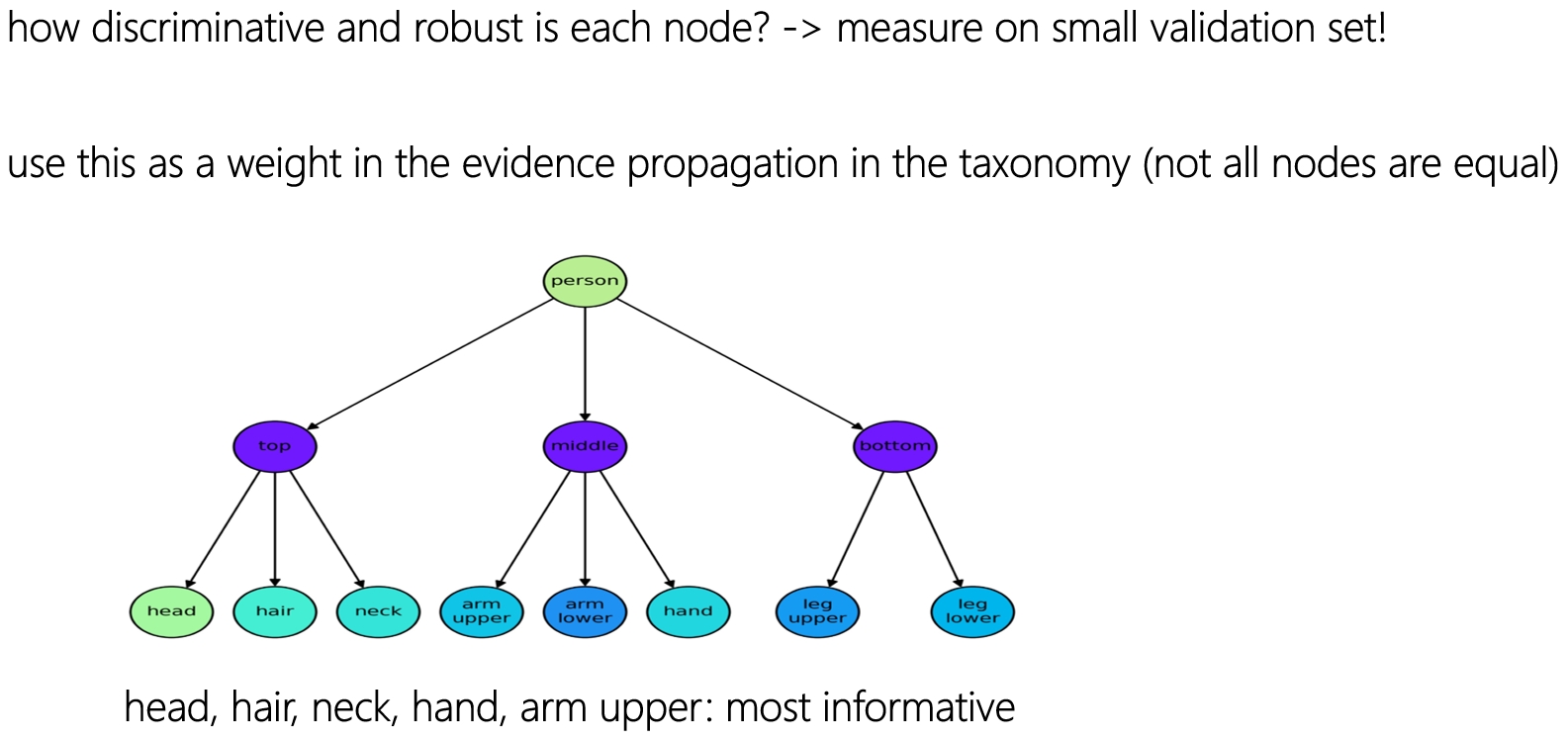

But not all parts are equally discriminative or robust. This post is about combining evidence in a more principled way, by taking into account how trustworthy the evidence for each part is.

The coloring of the nodes in the part hierarchy shows that some parts are more trustworthy (e.g., head) than others (e.g., lower arm).

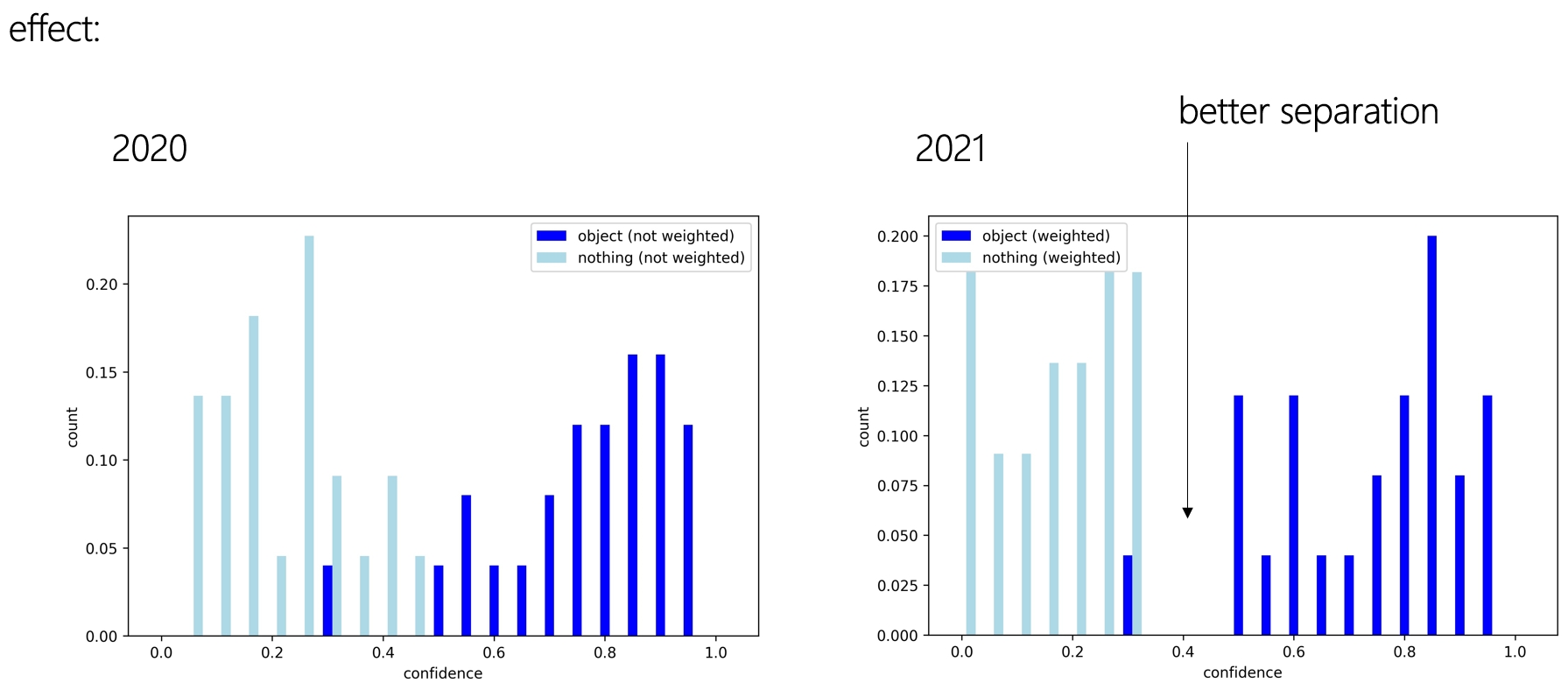

In the previous model, a high confidence somewhere in the taxonomy propagated too strongly to the overall confidence, even if that part of the taxonomy was not considered very trustworthy. A proper weighting of evidence was needed, ideally one that takes into account the trustworthiness of each piece of evidence. This indeed leads to better predictions than the previous model, which was sometimes over-confident. The confidence histograms show that the new model better predicts whether the object is indeed present (high confidence) or not (low confidence).
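The post does not spell out the weighting scheme, so the following is only a minimal sketch of the idea, assuming a trust-weighted average of part confidences. The names `PartEvidence`, `naive_confidence` and `weighted_confidence`, as well as all numbers, are hypothetical and chosen for illustration; the actual model may combine evidence differently.

```python
from dataclasses import dataclass


@dataclass
class PartEvidence:
    name: str
    confidence: float  # detector confidence for this part, in [0, 1]
    trust: float       # assumed trustworthiness of this part, in [0, 1]


def naive_confidence(parts):
    """Overall confidence dominated by the single most confident part."""
    return max(p.confidence for p in parts)


def weighted_confidence(parts):
    """Trust-weighted average: untrustworthy parts contribute less."""
    total_trust = sum(p.trust for p in parts)
    if total_trust == 0:
        return 0.0  # no trustworthy evidence at all
    return sum(p.trust * p.confidence for p in parts) / total_trust


# Illustrative values only: a trustworthy part (head) with weak evidence
# and an untrustworthy part (lower arm) with strong evidence.
parts = [
    PartEvidence("head", confidence=0.2, trust=0.9),
    PartEvidence("lower arm", confidence=0.95, trust=0.2),
]

print(f"naive:    {naive_confidence(parts):.2f}")
print(f"weighted: {weighted_confidence(parts):.2f}")
```

In this toy case, the strong but untrustworthy lower-arm evidence dominates the naive combination, while the weighted combination stays closer to the more trustworthy head evidence.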

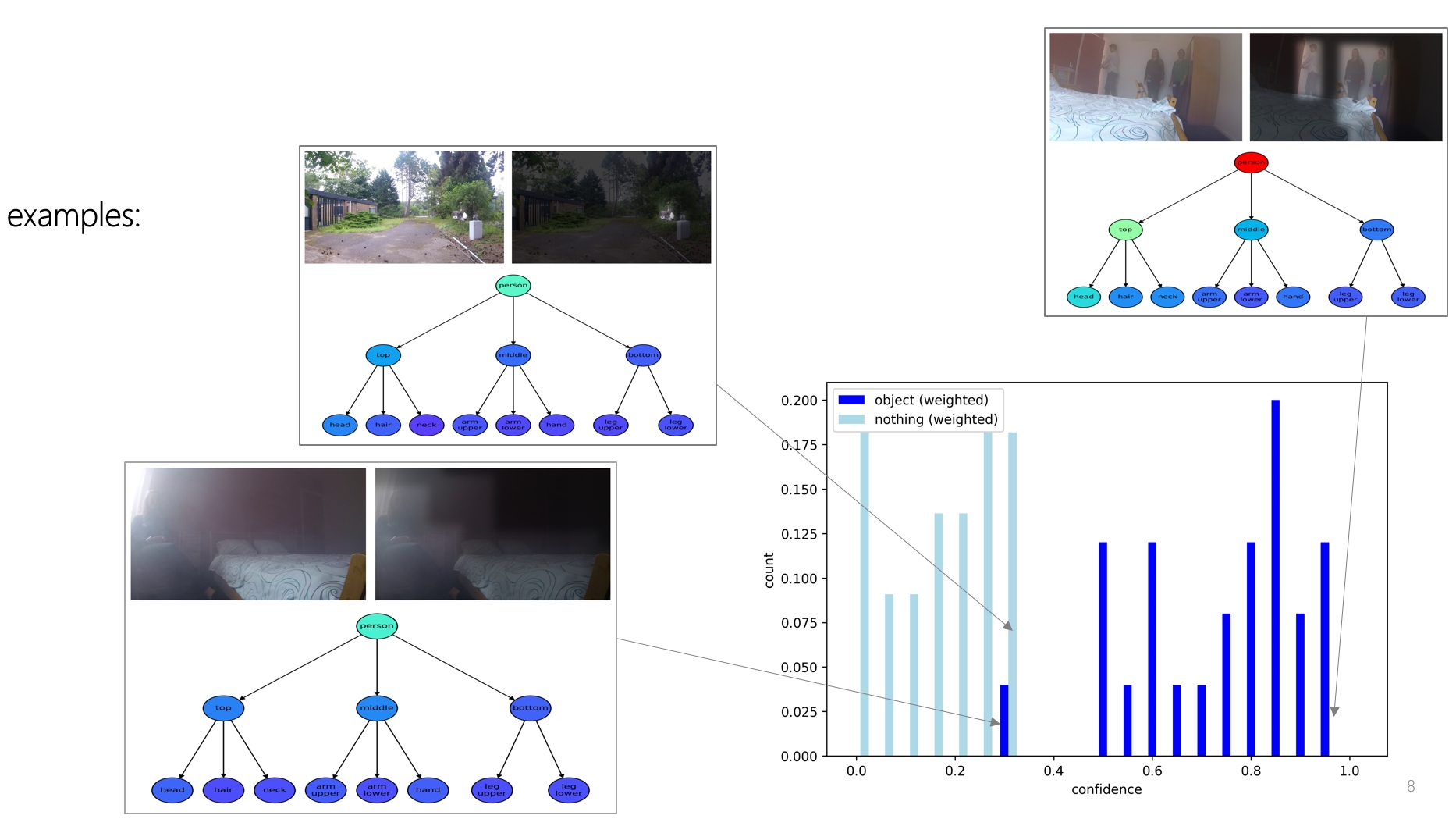

Example predictions are shown below.

This research is supported by the project Appl.AI SNOW 2021.