Probabilistic Logical Programming

Understanding situations and scenes often requires relational reasoning. For instance, a situation is dangerous because the cyclist is getting very close to the car.

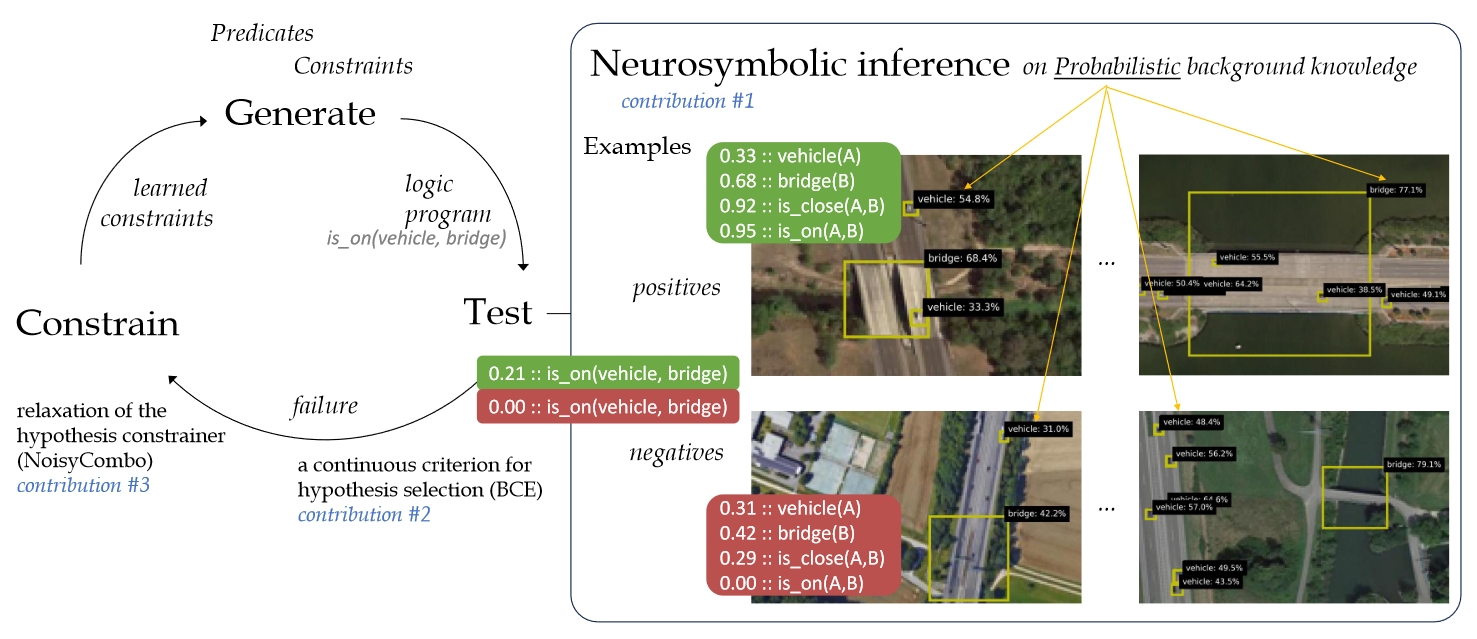

Relational reasoning can be performed with inductive logic programming (ILP). However, many ILP methods cannot handle perceptual flaws or probabilities, whereas many real-world observations are inherently uncertain.

We propose Propper, which extends ILP with neurosymbolic inference to deal with perceptual uncertainty and errors. We introduce a continuous criterion for hypothesis selection and a relaxation of the hypothesis constrainer.
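
To illustrate why a continuous selection criterion can matter, the sketch below compares two candidate hypotheses whose predictions are probabilities rather than true/false values. The text above does not say which criterion Propper uses; binary cross-entropy is chosen here purely as an assumed example, and the function names, threshold, and probability values are hypothetical.

```python
import math

def binary_score(probs, labels, threshold=0.5):
    """Number of examples classified correctly after thresholding (higher is better)."""
    return sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))

def bce_score(probs, labels, eps=1e-9):
    """Mean binary cross-entropy between predicted probabilities and labels (lower is better)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

# Hypothetical data: 1 = positive example, 0 = negative example.
labels = [1, 1, 0, 0]
# Probabilities that each candidate hypothesis assigns to the examples (made up).
hyp_a = [0.9, 0.8, 0.2, 0.1]      # confident and correct
hyp_b = [0.6, 0.55, 0.45, 0.4]    # correct, but barely

print(binary_score(hyp_a, labels), binary_score(hyp_b, labels))
print(bce_score(hyp_a, labels), bce_score(hyp_b, labels))
```

In this toy case the thresholded score cannot separate the two hypotheses, because both classify every example correctly, while the continuous score prefers the one whose probabilities lie further from the decision boundary.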

For relational patterns in noisy images, Propper can learn effective logic programs from as few as 8 examples. It outperforms binary ILP and statistical models such as a graph neural network.

Accepted at the International Conference on Logic Programming, 2024.