Self-Guided Diffusion Models @ CVPR 2023

There is a revolution going on in the field of image generation by a new type of models called diffusion models. Examples are Dall-E and StableDiffusion. It was shown that generated images get better when guiding the diffusion model, e.g., with a textual description or semantic token from another classifier. We were curious whether we can guide them also with a self-supervised learning signal, which is beneficial because it requires no annotation effort for training of the model.

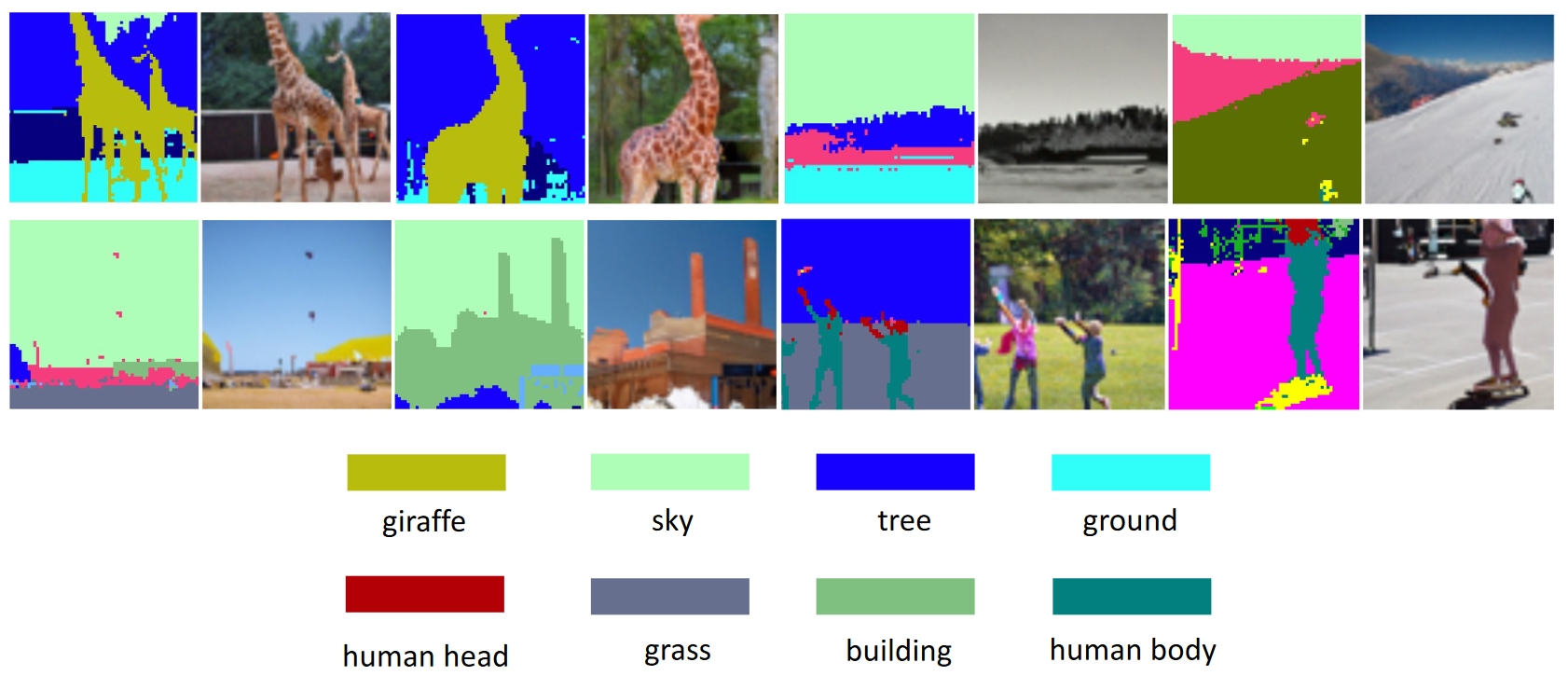

We use self-learned bounding boxes from another method (LOST, BMVC 2022) to serve as guidance for the generation. That works nicely! It creates images with similar semantic content, see the illustration for some example results.

The paper was accepted at CVPR 2023. A collaboration with University of Amsterdam, with PhD student David Zhang, professor Cees Snoek and others. Funded by NWO Efficient Deep Learning and TNO Appl.AI’s Scientific Exploration.