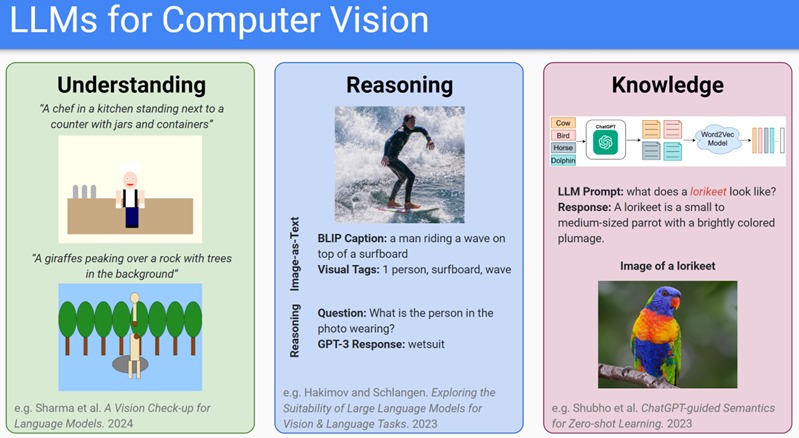

LLMs for Computer Vision

Many types of knowledge can be extracted from Large Language Models (LLMs), but do they also know about the visual world around us? Yes, they do! LLMs can tell us about the visual properties of animals, for instance, even fine-grained ones such as specific patterns and colors.

We exploited this to train a model for animal classification without any human labeling of these properties.

We simply took the LLM's answers as ground truth instead of human annotations.
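The post does not spell out the exact pipeline, so what follows is only a minimal sketch of the general idea, assuming an attribute-based setup. Free-text LLM replies to yes/no questions about each animal are parsed into a class-attribute table. That table then stands in for human attribute labels, both as training targets for an attribute predictor and for matching predicted attributes back to a class. The attribute list, the LLM replies, the function names (`parse_answer`, `build_class_attribute_matrix`, `attribute_targets`, `classify`) and the nearest-match rule are all hypothetical illustrations, not the authors' model.

```python
from typing import Dict, List

# Toy attribute vocabulary (hypothetical, for illustration only).
ATTRIBUTES: List[str] = ["stripes", "spots", "orange"]

# Hypothetical raw LLM replies to prompts such as
# "Does a tiger have stripes? Answer yes or no."
# In this sketch they replace a human-annotated attribute table.
LLM_ANSWERS: Dict[str, Dict[str, str]] = {
    "zebra": {"stripes": "Yes.", "spots": "No.", "orange": "No."},
    "tiger": {"stripes": "Yes.", "spots": "No.", "orange": "Yes."},
    "leopard": {"stripes": "No.", "spots": "Yes.", "orange": "Yes."},
}


def parse_answer(text: str) -> int:
    """Map a free-text LLM reply to a binary label: 1 for 'yes', else 0."""
    return 1 if text.strip().lower().startswith("yes") else 0


def build_class_attribute_matrix(
    answers: Dict[str, Dict[str, str]], attributes: List[str]
) -> Dict[str, List[int]]:
    """Treat the parsed LLM replies as ground-truth attributes per class."""
    return {
        cls: [parse_answer(replies[attr]) for attr in attributes]
        for cls, replies in answers.items()
    }


def attribute_targets(
    image_labels: List[str], matrix: Dict[str, List[int]]
) -> List[List[int]]:
    """Supervision for an attribute predictor: each training image inherits
    the LLM-derived attribute vector of its class label."""
    return [matrix[label] for label in image_labels]


def classify(scores: List[float], matrix: Dict[str, List[int]]) -> str:
    """Return the class whose attribute vector is closest (squared L2
    distance) to the predicted per-attribute probabilities of one image."""

    def dist(vec: List[int]) -> float:
        return sum((s - v) ** 2 for s, v in zip(scores, vec))

    return min(matrix, key=lambda cls: dist(matrix[cls]))


matrix = build_class_attribute_matrix(LLM_ANSWERS, ATTRIBUTES)
print(matrix)  # {'zebra': [1, 0, 0], 'tiger': [1, 0, 1], 'leopard': [0, 1, 1]}
print(attribute_targets(["tiger", "zebra"], matrix))  # [[1, 0, 1], [1, 0, 0]]
# Hypothetical attribute-predictor output for one image: striped, orange.
print(classify([0.9, 0.1, 0.8], matrix))  # tiger
```

In this toy setup no human ever fills in the attribute table: changing the LLM replies changes the supervision directly, which is the point being illustrated.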

Interestingly, this works just as well as using human annotations.

The research was done by our student Jona, who is also going on an ELLIS exchange to the University of Copenhagen to work with the renowned Professor Serge Belongie.